Virtualisation - a critical solution demanding critical testing

By Daryl Cornelius, Director Enterprise, Spirent Communications EMEA

Wednesday, 01 August, 2012

The complexity of combined business IT systems has led to a proliferation of hardware installations. When adding a major new software system, the safest option has often been to install it on its own dedicated server, rather than risk unforeseen problems from resource conflict or software compatibility with other software systems. Thus data centres evolved with ever-increasing numbers of servers, many running at as little as 5 to 10% capacity, contributing to the fact that IT power consumption already has a larger carbon footprint than the airline industry.

Meanwhile server performance has soared, and now multisocket, quad-core systems with 32 or more gigabytes of memory are the norm. With engines of such power available, it no longer makes sense to proliferate underworked servers. There is a growing need for consolidation, and the development of new ‘virtualisation’ technologies allows multiple independent workloads to run without conflict on a smaller number of servers.

Virtualisation can be seen as an integration of two opposing processes. On the one hand a number of separate servers can be consolidated so that software sees them as a single virtual server - even if its operation is spread across several hardware units. On the other hand a single server can be partitioned into a number of virtual servers, each behaving as an independent hardware unit, maybe dedicated to specific software applications, and yet bootable on demand at software speeds.

This means that IT departments are now able to control server sprawl across multiple data centres, using a combination of multicore computing and virtualisation software such as VMware Virtual Infrastructure, combined with hardware support from Intel Virtualization Technology (Intel VT) and AMD Virtualization (AMD-V). Fewer servers are now required and server utilisation levels have increased - allowing greater energy efficiency and lower power and cooling costs in addition to savings from reduced capital investment.

This virtualisation process applies not just to the processing function, but also to storage. Massive data storage can be safely located in high-security sites and replicated for added disaster proofing, while high-speed networking maintains performance levels matching local storage.

The flexibility of virtualisation - its ability not just to consolidate hardware but also to allow the processing power to be split into virtual servers as required - brings further advantages as it decouples the application environment from the constraints of the hosting hardware. Disaster recovery becomes simpler, more reliable and cost effective, as systems work around individual hardware failure by automatically diverting the load to other virtual servers. Virtual desktop environments can use centralised servers and thin clients to support large numbers of users with standard PC configurations that help to lower both capital and operating costs. Virtualisation allows development, test, and production environments to coexist on the same physical servers while performing as independent systems. Because virtualisation safely decouples application deployment from server purchasing decisions, it also means that virtual servers can be created for new applications and scaled on demand to accommodate the evolving needs of the business.

In addition to these benefits within any organisation, virtualisation also offers a new business model for service providers. They can outsource customer applications and locate any number of these services within their regional data centres, each in its own well-defined and secured virtual partition.

A question of bandwidth

Virtualisation typically means that a large number of servers running at just 5-10% capacity have been replaced by fewer, more powerful servers running at 60% or higher capacity. As with any IT advance, the resulting benefits also lead to greater usage, and this puts pressure on the network.
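The arithmetic behind this consolidation can be sketched in a few lines. This is a rough illustration only: it assumes the old and new servers have equal capacity and that workloads can be packed freely, neither of which the article states, and the function names are hypothetical.

```python
def consolidated_server_count(old_servers: int, old_util_pct: int, new_util_pct: int) -> int:
    """Servers needed to carry the same total work at a higher utilisation.

    Assumes old and new servers have equal capacity (an illustrative
    simplification). Utilisation is given in whole percent so the
    rounding-up is done in exact integer arithmetic.
    """
    total_work = old_servers * old_util_pct      # in "percent of one server"
    return -(-total_work // new_util_pct)        # ceiling division


def load_multiplier(old_util_pct: int, new_util_pct: int) -> float:
    """How many times busier each remaining server (and its I/O) becomes."""
    return new_util_pct / old_util_pct


if __name__ == "__main__":
    for old_pct in (5, 10):
        n = consolidated_server_count(100, old_pct, 60)
        x = load_multiplier(old_pct, 60)
        print(f"100 servers at {old_pct}% -> {n} servers at 60% ({x:.0f}x load each)")
```

Under these assumptions, 100 servers at 10% utilisation collapse to 17 servers at 60%, and each surviving server carries six times the previous load, which is the source of the network pressure.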

The typical I/O arrangement for a server has been to provide Gigabit Ethernet channels to the LAN plus Fibre Channel links to storage. The difference is that traditional Ethernet tolerates latency and some loss of frames that would be unacceptable for storage access, so provision is made for lossless Fibre Channel links. With the server running at six or more times the load, the I/O ports are under pressure. Adding further gigabit network interface cards is costly because every new card adds to server power consumption, cabling cost and complexity, and increases the number of access-layer switch ports that need to be purchased and managed. In addition, organisations often must purchase larger, more costly servers just to accommodate the number of expansion slots needed to support increasing network and I/O bandwidth demands.

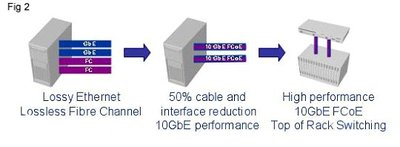

Figure 1 illustrates the size of the problem in a big data centre. The solution lies in 10 Gigabit Ethernet - fast enough to serve not only I/O to the LAN but also SAN (storage area network) and IPC (inter-processor communication) needs. Instead of four to eight gigabit cards in each server, just two 10 Gig cards offer full redundancy for availability plus extra room for expansion. Ethernet losses can be avoided using FCoE (Fibre Channel over Ethernet), which preserves the lossless character and management models of Fibre Channel. Another solution lies with new developments in Ethernet. Just as Carrier Ethernet has extended the Ethernet model to become a WAN solution, so the variously named Converged Enhanced Ethernet (CEE) or Data Centre Ethernet (DCE) is now available to serve SAN and IPC demands.
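The card comparison above can be checked with simple arithmetic. The sketch below assumes one port per card running at nominal line rate and ignores protocol overhead; the function names are hypothetical and chosen only for illustration.

```python
def aggregate_gbps(cards: int, gbps_per_card: int) -> int:
    """Nominal bandwidth with every card working."""
    return cards * gbps_per_card


def surviving_gbps(cards: int, gbps_per_card: int, failed: int = 1) -> int:
    """Nominal bandwidth left after `failed` cards drop out."""
    return max(cards - failed, 0) * gbps_per_card


if __name__ == "__main__":
    options = {
        "4 x 1 GbE": (4, 1),
        "8 x 1 GbE": (8, 1),
        "2 x 10 GbE": (2, 10),
    }
    for name, (cards, rate) in options.items():
        print(f"{name}: {aggregate_gbps(cards, rate)} Gbit/s total, "
              f"{surviving_gbps(cards, rate)} Gbit/s with one card down")
```

On these assumptions, a single surviving 10 Gig card still offers more nominal bandwidth than eight gigabit cards combined, which is why two cards are enough for both redundancy and headroom.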

Figure 2 summarises this evolution in the data centre - from GbE plus FC to 10 GbE ports and onward to racks of state-of-the-art blade servers connected via 10 GbE to a high-speed switch. A large data centre could have thousands of such servers, requiring a new generation of powerful, low-latency, lossless switching devices typified by Cisco’s Nexus 5000, offering up to 52 10 GbE ports, or its massive 256-port Nexus 7000.

The testing imperative

Network performance and reliability have always mattered, but virtualisation makes them critical. Rigorous testing is needed at every stage - to inform buying decisions, to ensure compliance before deployment, and to monitor for performance degradation and anticipate bottlenecks during operation. But today’s data centres pose particular problems.

Firstly, the problem of scale. In Spirent TestCenter this is addressed by a rack system supporting large numbers of test cards, including the latest HyperMetrics CV2 and 8-port 10GbE cards, to scale up to 4.8 terabits in a single rack.

A second problem involves measuring such low levels of latency, where the very presence of test equipment produces delays that must be compensated for. Manual compensation is time-consuming and, in some circumstances, impossible, whereas in the Spirent system described this compensation is automatic and adjusts according to the interface technology and speed.
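The general idea of latency compensation can be sketched as follows. This is not Spirent's method, which the article does not describe; it is a generic illustration that assumes the tester's own delay can be estimated from a loopback run (tester ports cabled back to back) made at the same interface type and speed. All function names and sample values are hypothetical.

```python
from statistics import median


def serialization_delay_ns(frame_bytes: int, link_gbps: float) -> float:
    """Time to clock one frame onto the wire; bits divided by Gbit/s gives ns."""
    return frame_bytes * 8 / link_gbps


def calibrate_offset_ns(loopback_samples_ns):
    """Estimate the tester's own delay from a loopback run with no device
    under test. The median is used so occasional outliers do not skew it."""
    return median(loopback_samples_ns)


def compensate_ns(measured_ns, offset_ns):
    """Subtract the tester's own delay from each measurement, clamping at zero."""
    return [max(m - offset_ns, 0.0) for m in measured_ns]


if __name__ == "__main__":
    # Serialization delay alone changes tenfold between 1 and 10 Gbit/s,
    # so a separate calibration is needed for each speed.
    for gbps in (1, 10):
        print(f"64-byte frame at {gbps} Gbit/s: {serialization_delay_ns(64, gbps):.1f} ns")

    offset = calibrate_offset_ns([100, 102, 98])     # made-up loopback samples
    print(compensate_ns([350, 360, 355], offset))    # made-up device measurements
```

Doing this by hand means repeating the calibration for every interface type and speed under test, which is the effort the automatic compensation described above removes.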

Latency compensation is just one example of the specific needs for testing either FCoE or DCE/CEE switching capability. Spirent TestCenter with HyperMetrics is used to test both and was the system of choice when Network World last year put Cisco’s Nexus 7000 to its Clear Choice test. The test addressed six areas - availability, resiliency, performance, features, manageability and usability. It was the biggest test Network World or Cisco’s engineers had ever conducted, but is a sure sign of the way things are going.

Virtualisation has largely driven the development of 10GbE, which will remain the technology of choice for the near future. The first 40GbE-enabled switches are predicted for 2010 to provide even faster throughput between switches, and work is already under way on 100GbE switches for service providers to meet the growing appetite for backbone bandwidth. As speed goes up, cost comes down, with Intel’s ‘LAN on motherboard’ building 10GbE into the very foundation of the server.
